This manual covers the installation of Develocity on Amazon Elastic Kubernetes Service (EKS).

Time to completion: 45 minutes

Develocity is a Kubernetes-based application, distributed as a Helm chart. Helm is a package manager for Kubernetes applications. Develocity can generally be installed on Kubernetes clusters running modern Kubernetes versions. Compatibility between versions of Kubernetes, Helm, and Develocity is documented in the Develocity documentation. Later versions may be compatible but have not been verified to work.

Helm manages all Develocity components.

Prerequisites

1. An AWS Account

A paid AWS account is required. A free tier account is not sufficient.

| This tutorial will not work on GovCloud accounts (us-gov regions). |

2. A Develocity License

If you have purchased Develocity or started a trial, you should already have a license file called develocity.license. Otherwise, you may request a Develocity trial license.

3. An AWS IAM User

Grant the user that will manage the instance the AmazonEC2FullAccess AWS managed policy.

To check the current user, run the following command:

$ aws sts get-caller-identity

If you are using AWS’s Cloud Shell (see section 1. AWS CLI), grant the user Cloud Shell permissions using the AWSCloudShellFullAccess AWS managed policy.

| If you choose to use Amazon RDS as your database or S3 to store your build scans, you will need the additional permissions described in the appendices. |

The IAM user must have permissions to work with Amazon EKS IAM roles, service linked roles, AWS CloudFormation, a VPC, and related resources.

You will need the permissions described by eksctl’s minimum IAM policies.

Host Requirements

This section outlines cluster and host requirements for the installation.

1. Database

Develocity installations have two database options:

- An embedded database that is highly dependent on disk performance.
- A user-managed database that is compatible with PostgreSQL 12, 13, or 14, including Amazon RDS and Aurora.

By default, Develocity stores its data in a PostgreSQL database that is run as part of the application itself, with data being stored in a directory mounted on its host machine.

RDS Database

There are instructions for using Amazon RDS as a user-managed database in the RDS appendix. This can have a number of benefits, including easier resource scaling, backup management, and failover support.

2. Storage

In addition to the database, Develocity needs some storage capacity for configuration files, logs, and build cache artifacts. These storage requirements apply regardless of which type of database you use, although the necessary size varies based on the database type. The majority of data is stored in the "installation directory", which defaults to /opt/gradle (unless otherwise specified in your Helm values file, described below).

Capacity

The minimum capacity required for the installation directory is 250 GB when using the embedded database and 30 GB when using a user-managed database. We recommend creating a dedicated volume for the installation directory, so that other data cannot consume the space Develocity requires, and ensuring that at least 10% of the volume’s space is free at all times.

The following are additional disk capacity requirements:

| Location | Storage Size |
|---|---|
| | 1 GB |
| | 30 GB |

These are not particularly performance sensitive.
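The 10% free-space recommendation above can be checked with a small filter over df output. This is a minimal sketch, assuming POSIX df -P output (the Capacity column holds the used percentage); has_min_free_space is a hypothetical helper name, and where you run df (on a host, or inside a pod with kubectl exec) depends on how your volumes are mounted.

```shell
# Hypothetical helper: reads `df -P <dir>` output on stdin and succeeds if the
# volume has at least MIN_FREE percent (default 10) of its space free.
has_min_free_space() {
  awk -v min="${1:-10}" '
    NR == 2 {
      used = $5
      sub(/%/, "", used)          # Capacity column, e.g. "8%" -> "8"
      found = 1
      ok = (100 - used) >= (min + 0)
    }
    END { exit !(found && ok) }'  # fail if no data line or too little space
}
```

For example, df -P /opt/gradle | has_min_free_space 10 succeeds while at least 10% of the volume is free.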

Performance

For production workloads, storage volumes should exhibit SSD-class disk performance of at least 3000 IOPS (input/output operations per second). Develocity is not compatible with network-based storage solutions due to limitations of latency and data consistency.

| Disk performance has a significant impact on Develocity performance. |

Object storage

Develocity administrators can store Build Scan data in an object storage service, such as Amazon S3. This can help performance in high-traffic installations by reducing the load on the database. Object storage services also offer performance and cost advantages compared to database storage. Gradle recommends using an object storage service for your installation if you deploy Develocity to a cloud provider or have an available internal S3-compatible object store. See Build Scan object storage in the Develocity Administration Manual for a description of the benefits and limitations.


3. Network Connectivity

Develocity requires network connectivity for periodic license validation.

| An installation of Develocity will not start unless it can connect to both registry.gradle.com and harbor.gradle.com. |

It is strongly recommended that production installations of Develocity are configured to use HTTPS with a trusted certificate.

When installing Develocity, you will need to provide a hostname, such as develocity.example.com.

Pre-Installation

If you decide to use Cloud Shell, complete sections 3. Eksctl, 4. Helm, 5. Hostname and then skip to Cluster Configuration.

1. AWS CLI

You will be using the aws command line tool to provision and configure your AWS resources. To install it on your local machine, follow the instructions in the AWS documentation. Use version 2.11.26 or later of AWS CLI v2, or version 1.27.150 or later of AWS CLI v1.
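To confirm that the installed CLI meets these minimums, you could compare the output of aws --version against them. This is a minimal sketch, assuming sort -V (GNU coreutils) for version ordering; version_ge and aws_cli_version_ok are hypothetical helper names.

```shell
# version_ge A B: succeeds if version A >= version B (uses sort -V ordering).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Hypothetical helper: reads `aws --version` output on stdin, e.g.
# "aws-cli/2.13.0 Python/3.11.4 Linux/5.15.0 exe/x86_64", and checks it
# against the minimum for its major version (2.11.26 or 1.27.150).
aws_cli_version_ok() {
  ver=$(sed -n 's|^aws-cli/\([0-9][0-9.]*\).*|\1|p' | head -n 1)
  case "$ver" in
    2.*) version_ge "$ver" 2.11.26 ;;
    1.*) version_ge "$ver" 1.27.150 ;;
    *) return 1 ;;   # unrecognised output
  esac
}
```

Usage: aws --version 2>&1 | aws_cli_version_ok. The 2>&1 is there because some AWS CLI v1 installations print the version to stderr.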

The aws CLI must be configured with an access key to be able to access your AWS account. If you do not have an access key, follow the AWS CLI prerequisites guide, and then the quick setup guide.

If you have an access key already, but have not configured the aws CLI, you can follow the AWS CLI quick setup guide.

Choose the region you wish to install Develocity in. Pick the region geographically closest to you or to any pre-existing compute resources, such as CI agents, to ensure the best performance. AWS provides a list of all available EKS regions.

2. Kubectl

kubectl is a command line tool for working with Kubernetes clusters. Use version 1.26 or later corresponding with your cluster version.

AWS hosts its own kubectl binaries, which you can install by following its guide.

You can also install kubectl by following the steps in the Kubernetes documentation.

3. Eksctl

eksctl is a CLI tool for creating and managing EKS clusters. While you can use the AWS CLI, eksctl is much easier to use.

To install it, follow AWS’s eksctl installation guide.

$ curl --silent --location "https://github.com/weaveworks/eksctl/releases/latest/download/eksctl_$(uname -s)_amd64.tar.gz" | tar xz -C /tmp
$ sudo mv /tmp/eksctl /usr/local/bin

If you are using Cloud Shell, replace the second command with:

$ mkdir -p ~/.local/bin && mv /tmp/eksctl ~/.local/bin

4. Helm

Helm is a package manager for Kubernetes applications.

If you are using Cloud Shell, first run:

$ export HELM_INSTALL_DIR=~/.local/bin

| If you skipped the previous step, create the ~/.local/bin directory first. |

The Helm installer script requires OpenSSL. Install it by running:

$ sudo yum install openssl -y

Alternatively, if you don’t use yum, you can use sudo apt install openssl -y, brew install openssl, or your package manager of choice.

| If you don’t want to install OpenSSL, you can disable the Helm installer’s checksum verification with export VERIFY_CHECKSUM=false. |

To install Helm, run:

$ curl https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 | bash

| See Helm’s installation documentation for more details and non-Linux instructions. |

5. Hostname

AWS will automatically assign a hostname like a6d2f554d845844a69a0aac243289712-4696594a57a75795.elb.us-west-2.amazonaws.com to the load balancer you create for Develocity. You can use this hostname to access Develocity.

If you want to access Develocity by a hostname of your choosing (e.g. develocity.example.com), you will need the ability to create the necessary DNS record to route this name to the AWS-created hostname.

| We recommend using a custom hostname with AWS’s Route 53 rather than the AWS-generated hostname, because the generated hostname is not known until after Develocity is installed. |

Cluster Configuration

In this section you will create an EKS cluster to run Develocity.

1. Create a Cluster

Create an Amazon EKS cluster named develocity.

To create it, run:

$ eksctl create cluster --name develocity \
    --nodes 3 \ (1)
    --instance-types=m5.xlarge \ (2)
    --region us-west-1 (3)

| 1 | Recommended node group specification: three nodes with 4 CPUs and 16 GiB of memory each. |
| 2 | For example, m5.xlarge EC2 instances meet these requirements. |
| 3 | Replace us-west-1 with your AWS Region of choice. |

The output should look similar to this:

[ℹ] creating EKS cluster "develocity" in "us-west-1" region with managed nodes
[ℹ] building cluster stack "eksctl-develocity-cluster"
[ℹ] deploying stack "eksctl-develocity-cluster"
[✔] EKS cluster "develocity" in "us-west-1" region is ready

This will take several minutes, and will add the cluster context to kubectl when it is done (note that this will persist across Cloud Shell sessions).

eksctl creates a CloudFormation stack, which you can see in the CloudFormation web UI.

| For more details, consult the eksctl getting started guide. |
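If you prefer to keep the cluster definition in a file, eksctl also accepts a ClusterConfig file through eksctl create cluster -f. The sketch below writes such a file mirroring the command above. The function write_cluster_config and the node group name develocity-nodes are hypothetical, and the fields should be checked against the eksctl config file schema for your eksctl version.

```shell
# Hypothetical helper: writes an eksctl ClusterConfig equivalent to the
# command above (three m5.xlarge managed nodes) to FILE (default cluster.yaml).
# Usage: write_cluster_config NAME REGION [FILE]
write_cluster_config() {
  cfg_file=${3:-cluster.yaml}
  cat > "$cfg_file" <<EOF
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: $1
  region: $2
managedNodeGroups:
  - name: develocity-nodes
    instanceType: m5.xlarge
    desiredCapacity: 3
EOF
}
```

Used instead of the command above, this would be write_cluster_config develocity us-west-1 followed by eksctl create cluster -f cluster.yaml.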

2. Inspect Nodes

These are managed nodes: Amazon EC2 instances that run Amazon Linux.

| Develocity does not support Fargate nodes out of the box, because Fargate nodes do not support any storage classes by default. |

Once your cluster is up and running, you will be able to see the nodes:

$ kubectl get nodes -o wide

NAME                                          STATUS   ROLES    AGE
ip-192-168-45-72.us-west-2.compute.internal   Ready    <none>   7m1s
ip-192-168-72-77.us-west-2.compute.internal   Ready    <none>   6m58s
ip-192-168-72-79.us-west-2.compute.internal   Ready    <none>   6m58s
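To check the node count from a script, for example before continuing with the next steps, a small filter over the kubectl get nodes output is enough. This is a sketch, assuming the default column layout shown above with STATUS in the second column; count_ready_nodes is a hypothetical helper name.

```shell
# Hypothetical helper: reads `kubectl get nodes` output on stdin (header line
# first) and prints how many nodes report STATUS exactly "Ready".
count_ready_nodes() {
  awk 'NR > 1 && $2 == "Ready" { n++ } END { print n + 0 }'
}
```

For example, [ "$(kubectl get nodes | count_ready_nodes)" -ge 3 ] succeeds once all three nodes requested above are Ready.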

You can also see the workloads running on your cluster.

$ kubectl get pods -A -o wide

NAMESPACE     NAME                       READY   STATUS    RESTARTS   AGE     IP          NODE
kube-system   aws-node-12345             1/1     Running   0          7m43s   192.0.2.1   ip-192-0-2-1.region-code.compute.internal
kube-system   aws-node-67890             1/1     Running   0          7m46s   192.0.2.0   ip-192-0-2-0.region-code.compute.internal
kube-system   aws-node-45690             1/1     Running   0          7m50s   192.0.2.3   ip-192-0-2-3.region-code.compute.internal
kube-system   coredns-1234567890-abcde   1/1     Running   0          14m     192.0.2.5   ip-192-0-2-5.region-code.compute.internal
kube-system   coredns-1234567890-12345   1/1     Running   0          14m     192.0.2.4   ip-192-0-2-4.region-code.compute.internal
kube-system   kube-proxy-12345           1/1     Running   0          7m46s   192.0.2.0   ip-192-0-2-0.region-code.compute.internal
kube-system   kube-proxy-67890           1/1     Running   0          7m43s   192.0.2.1   ip-192-0-2-1.region-code.compute.internal
kube-system   kube-proxy-45690           1/1     Running   0          7m43s   192.0.2.3   ip-192-0-2-3.region-code.compute.internal

3. Create a Storage Class

This guide uses the embedded database. You may have a different setup depending on your Helm values file.

| Instructions for using Amazon RDS as a user-managed database are in Appendix A: Using Amazon RDS. |

To use EBS volumes, you need to install the EBS CSI driver.

First, enable OIDC for your cluster and create a service account for the driver to use:

$ eksctl utils associate-iam-oidc-provider --cluster develocity --approve
$ eksctl create iamserviceaccount \
    --name ebs-csi-controller-sa \
    --namespace kube-system \
    --cluster develocity \
    --attach-policy-arn "arn:aws:iam::aws:policy/service-role/AmazonEBSCSIDriverPolicy" \
    --approve \
    --role-only \
    --role-name eksctl-managed-AmazonEKS_EBS_CSI_DriverRole

Then install the driver:

$ ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)
$ eksctl create addon \
    --name aws-ebs-csi-driver \
    --cluster develocity \
    --force \
    --service-account-role-arn "arn:aws:iam::${ACCOUNT_ID}:role/eksctl-managed-AmazonEKS_EBS_CSI_DriverRole"

| For more details on installing and managing the EBS CSI driver, see AWS’s documentation. |

To use gp3 volumes, first add a gp3 storage class to the cluster. Create the manifest file gp3.yaml:

kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: gp3
provisioner: ebs.csi.aws.com
volumeBindingMode: WaitForFirstConsumer
parameters:
  type: gp3

Then run the command to apply it:

$ kubectl apply -f gp3.yaml

| For more details on the options available for EBS volumes using the CSI driver, see the driver’s GitHub project, specifically the StorageClass parameters documentation. |
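If you prefer not to open an editor (for example in Cloud Shell), the gp3.yaml manifest can also be written directly from the shell with a here-document. This is only a convenience sketch with the same content as the manifest above.

```shell
# Write the gp3 StorageClass manifest to gp3.yaml in the current directory.
# The quoted 'EOF' delimiter stops the shell from expanding anything inside.
cat > gp3.yaml <<'EOF'
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: gp3
provisioner: ebs.csi.aws.com
volumeBindingMode: WaitForFirstConsumer
parameters:
  type: gp3
EOF
```

After kubectl apply -f gp3.yaml, running kubectl get storageclass gp3 should list the new storage class.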

The Build Scan® service of Develocity can be configured to store its data in an Amazon S3 bucket. This can help performance in high-traffic installations by taking load off the database. See Appendix B: Storing Build Scans in S3 for details.

4. Install Ingress Controller

In this tutorial, we will use the AWS Load Balancer Controller to automatically manage AWS Application Load Balancer (ALB) objects.

| The following are condensed instructions from Installing the AWS Load Balancer Controller add-on. |

First, we create some required AWS resources:

$ curl -O https://raw.githubusercontent.com/kubernetes-sigs/aws-load-balancer-controller/v2.7.2/docs/install/iam_policy.json
$ aws iam create-policy \
    --policy-name AWSLoadBalancerControllerIAMPolicy \
    --policy-document file://iam_policy.json
$ ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)
$ eksctl create iamserviceaccount \
    --cluster=develocity \
    --namespace=kube-system \
    --name=aws-load-balancer-controller \
    --role-name AmazonEKSLoadBalancerControllerRole \
    --attach-policy-arn=arn:aws:iam::${ACCOUNT_ID}:policy/AWSLoadBalancerControllerIAMPolicy \
    --approve

Then install the AWS Load Balancer Controller:

$ helm repo add eks https://aws.github.io/eks-charts
$ helm repo update eks
$ helm install aws-load-balancer-controller eks/aws-load-balancer-controller \
    -n kube-system \
    --set clusterName=develocity \
    --set serviceAccount.create=false \
    --set serviceAccount.name=aws-load-balancer-controller

Finally, check whether the controller has been successfully installed:

$ kubectl get deployment -n kube-system aws-load-balancer-controller

NAME                           READY   UP-TO-DATE   AVAILABLE   AGE
aws-load-balancer-controller   2/2     2            2           27s

Installation

In this section you will install Develocity on your newly created cluster.

1. Prepare a Helm values file

Installation options for Develocity are specified in a Helm values file.

Follow the instructions in the Kubernetes Helm Chart Configuration Guide and return to this document with a complete values.yaml file.

To use ALB as the ingress controller, add these values:

global:
  externalSSLTermination: true (1)

ingress:
  ingressClassName: alb
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}]'
    alb.ingress.kubernetes.io/ssl-redirect: '443' (2)
    alb.ingress.kubernetes.io/target-type: ip
  grpc: (3)
    serviceAnnotations:
      alb.ingress.kubernetes.io/success-codes: '12'
      alb.ingress.kubernetes.io/backend-protocol-version: GRPC
      alb.ingress.kubernetes.io/healthcheck-path: /
      alb.ingress.kubernetes.io/healthcheck-port: '6011'
  http:
    serviceAnnotations:
      alb.ingress.kubernetes.io/backend-protocol-version: HTTP1
      alb.ingress.kubernetes.io/healthcheck-path: /ping
      alb.ingress.kubernetes.io/healthcheck-port: '9080'

| 1 | ALB terminates SSL connections. |
| 2 | All HTTP traffic is redirected to HTTPS by the load balancer. |
| 3 | Required for usage with Bazel. |

| You can use AWS’s certificate management service to manage a trusted SSL certificate and provide it to your ingress. To use it, configure the ingress controller service using AWS’s annotations, and configure Develocity for external SSL termination. Note that this requires using a hostname you own. |

This tutorial assumes you import a valid self-signed certificate for your hostname into AWS Certificate Manager (ACM), for example:

$ mkcert develocity.example.com
$ aws acm import-certificate --certificate fileb://develocity.example.com.pem --private-key fileb://develocity.example.com-key.pem

The AWS Load Balancer Controller can then discover the certificate automatically.

| Please consult Connecting to Develocity for using a self-signed certificate with the Develocity Gradle plugin. |

2. Install the Helm chart

Add the https://helm.gradle.com/ helm repository and update it:

$ helm repo add gradle https://helm.gradle.com/
$ helm repo update gradle

3. Install Develocity

Run helm install with the following command:

$ helm install \
    --create-namespace --namespace develocity \
    develocity \
    gradle/gradle-enterprise \
    --values path/to/values.yaml \ (1)
    --set-file global.license.file=path/to/develocity.license (2)

| 1 | The Helm values file you prepared in 1. Prepare a Helm values file. |
| 2 | Your Develocity license file. |

You should see output similar to this:

NAME: develocity
LAST DEPLOYED: Wed Jul 13 04:08:35 2022
NAMESPACE: develocity
STATUS: deployed
REVISION: 1
TEST SUITE: None

4. Start Develocity

You can see the status of Develocity starting up by examining its pods.

$ kubectl --namespace develocity get pods

NAME                                               READY   STATUS              RESTARTS   AGE
gradle-enterprise-operator-76694c949d-md5dh        1/1     Running             0          39s
gradle-monitoring-5545d7d5d8-lpm9x                 1/1     Running             0          39s
gradle-database-65d975cf8-dk7kw                    0/2     Init:0/2            0          39s
gradle-build-cache-node-57b9bdd46d-2txf5           0/1     Init:0/1            0          39s
gradle-proxy-0                                     0/1     ContainerCreating   0          39s
gradle-metrics-cfcd8f7f7-zqds9                     0/1     Running             0          39s
gradle-test-distribution-broker-6fd84c6988-x6jvw   0/1     Init:0/1            0          39s
gradle-keycloak-0                                  0/1     Pending             0          39s
gradle-enterprise-app-0                            0/1     Pending             0          39s

Eventually the pods should all report as Running:

$ kubectl --namespace develocity get pods

NAME                                               READY   STATUS    RESTARTS   AGE
gradle-enterprise-operator-76694c949d-md5dh        1/1     Running   0          4m
gradle-monitoring-5545d7d5d8-lpm9x                 1/1     Running   0          4m
gradle-proxy-0                                     1/1     Running   0          3m
gradle-database-65d975cf8-dk7kw                    2/2     Running   0          3m
gradle-enterprise-app-0                            1/1     Running   0          3m
gradle-metrics-cfcd8f7f7-zqds9                     1/1     Running   0          3m
gradle-test-distribution-broker-6fd84c6988-x6jvw   1/1     Running   0          3m
gradle-build-cache-node-57b9bdd46d-2txf5           1/1     Running   0          4m
gradle-keycloak-0                                  1/1     Running   0          3m
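Rather than re-running the command by hand, you could poll until every pod is ready. This is a minimal sketch, assuming the default kubectl get pods column layout shown above. all_pods_ready and wait_for_develocity_pods are hypothetical helper names, and the check would need adjusting if your installation runs pods that legitimately finish in a Completed state.

```shell
# Hypothetical helper: reads `kubectl get pods` output on stdin and succeeds
# only if at least one pod is listed and every pod is Running with all of its
# containers ready (READY column "n/n").
all_pods_ready() {
  awk 'NR > 1 {
         seen = 1
         split($2, ready, "/")
         if ($3 != "Running" || ready[1] != ready[2]) bad = 1
       }
       END { exit !(seen && !bad) }'
}

# Hypothetical helper: polls the develocity namespace until all_pods_ready
# succeeds, giving up after TRIES attempts (default 40), sleeping
# POLL_SECONDS (default 15) after each failed attempt.
wait_for_develocity_pods() {
  tries=${1:-40}
  while [ "$tries" -gt 0 ]; do
    if kubectl --namespace develocity get pods | all_pods_ready; then
      return 0
    fi
    tries=$((tries - 1))
    sleep "${POLL_SECONDS:-15}"
  done
  return 1
}
```

With the defaults, wait_for_develocity_pods waits roughly ten minutes before giving up.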

5. Configure the Hostname

If you intend to use a custom hostname to access your Develocity instance, you now need to add the appropriate DNS records.

You can find the ALB generated hostname by describing your ingress:

$ kubectl get ingress --namespace develocity -o jsonpath='{.items[0].status.loadBalancer.ingress[0].hostname}'

This yields something like:

abcdefg123456789-123456789.elb.us-west-2.amazonaws.com

Add a CNAME record for your hostname that points to the public hostname of your ALB.

develocity.example.com CNAME abcdefg123456789-123456789.elb.us-west-2.amazonaws.com

You should verify that your DNS record works correctly, for example by running dig develocity.example.com.
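DNS changes can take a while to propagate, so it can help to check the record from a script. This is a minimal sketch, assuming dig is installed; cname_points_to is a hypothetical helper name. It compares the output of dig +short against the ALB hostname, ignoring the trailing dot that dig prints.

```shell
# Hypothetical helper: reads `dig +short NAME CNAME` output on stdin and
# succeeds if any returned record equals TARGET (trailing dots ignored).
cname_points_to() {
  expected=${1%.}
  while IFS= read -r line; do
    if [ "${line%.}" = "$expected" ]; then
      return 0
    fi
  done
  return 1
}
```

For example: dig +short develocity.example.com CNAME | cname_points_to abcdefg123456789-123456789.elb.us-west-2.amazonaws.com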

Alternatively, you can use the hostname generated by AWS. In this case, update your Helm values by running:

$ helm upgrade \
    --namespace develocity \
    develocity \
    gradle/gradle-enterprise \
    --reuse-values \
    --set global.hostname=abcdefg123456789-123456789.elb.us-west-2.amazonaws.com

Then update the imported certificate so that it includes abcdefg123456789-123456789.elb.us-west-2.amazonaws.com as a common name or subject alternative name.
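Whichever hostname you use, the certificate imported into ACM should cover it. This is a sketch of a local check, assuming the openssl CLI is installed; cert_covers_host is a hypothetical helper name. It only inspects DNS subject alternative names, not the common name, since current browsers rely on subject alternative names.

```shell
# Hypothetical helper: succeeds if HOST is listed as a DNS subject
# alternative name in the PEM certificate CERT.
# Usage: cert_covers_host CERT HOST
cert_covers_host() {
  openssl x509 -in "$1" -noout -text \
    | tr ',' '\n' \
    | sed 's/^[[:space:]]*//' \
    | grep -Fqx "DNS:$2"
}
```

For example, cert_covers_host develocity.example.com.pem develocity.example.com checks the certificate created with mkcert above.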

| You can use AWS’s DNS service, Route 53, to route traffic to your ALB by following AWS’s Route 53 documentation. |

If you are installing Develocity in a highly available setup, we recommend submitting a ticket at support.gradle.com for assistance.

Develocity has a /ping endpoint, which can be used to verify network connectivity with Develocity.

Connectivity to your Develocity installation can be tested by running the following command on machines that need to connect to Develocity:

$ curl -sw \\n --fail-with-body --show-error https://«develocity-host»/ping

It should return SUCCESS.
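Because the load balancer and DNS may take a few minutes to become ready, you may want to retry the /ping check instead of running it once. This is a minimal sketch, assuming curl is installed and the machine running it trusts Develocity’s certificate (for a self-signed certificate you would add curl’s --cacert option); wait_for_ping is a hypothetical helper name.

```shell
# Hypothetical helper: polls https://HOST/ping until the response body is
# SUCCESS. Tries ATTEMPTS times (default 30), DELAY seconds apart (default 10).
# Usage: wait_for_ping HOST [ATTEMPTS] [DELAY]
wait_for_ping() {
  attempts=${2:-30}
  while [ "$attempts" -gt 0 ]; do
    # --fail makes HTTP errors produce no body, so they never match SUCCESS
    if [ "$(curl --silent --fail "https://$1/ping")" = "SUCCESS" ]; then
      return 0
    fi
    attempts=$((attempts - 1))
    if [ "$attempts" -gt 0 ]; then
      sleep "${3:-10}"
    fi
  done
  return 1
}
```

For example, wait_for_ping develocity.example.com 30 10 waits up to about five minutes.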

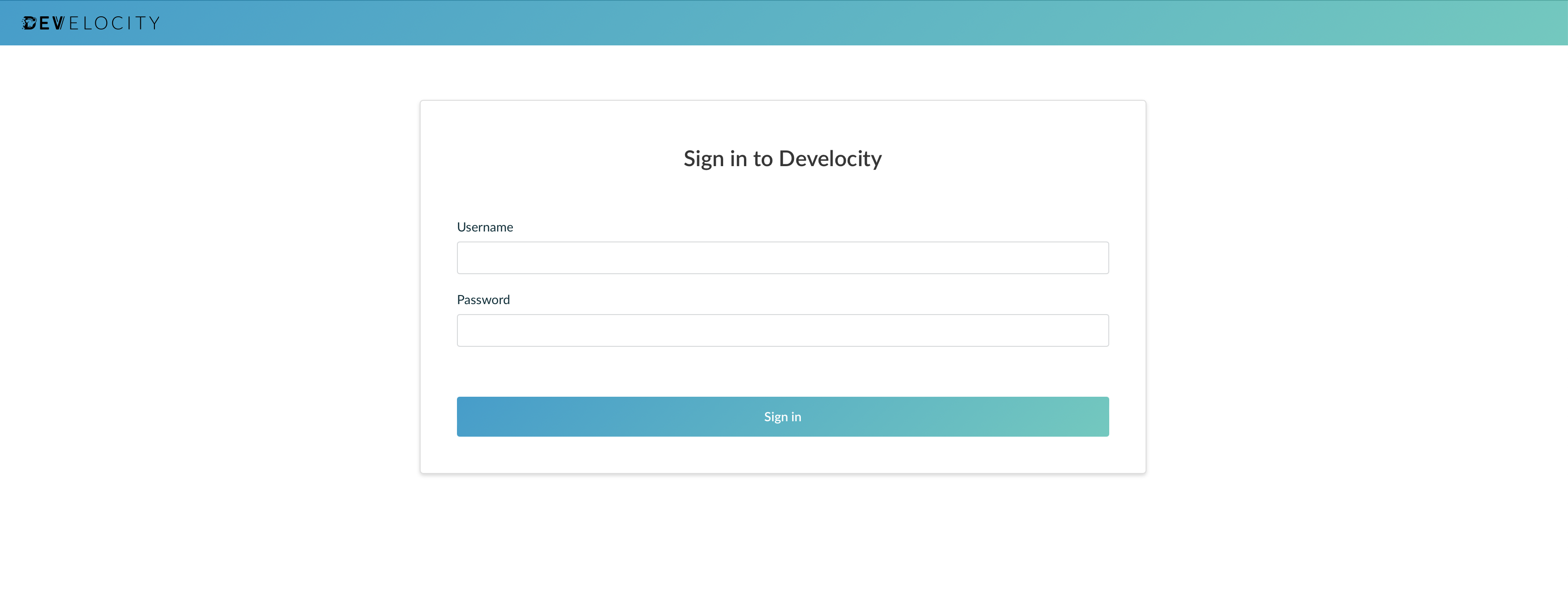

Once all pods have a status of Running and the system is up and connected, you can interact with it by visiting its URL in a web browser (i.e. the hostname).

Develocity is installed and running.

If you have decided to use a self-signed certificate, you need to add this certificate to SSL trust in Develocity Administration. To do so, visit https://«develocity-host»/admin/network/ssl-trust and enter the certificate you imported into AWS Certificate Manager in 1. Prepare a Helm values file.

Post-Installation

Many features of Develocity, including access control, database backups, and Build Scan retention, can be configured once Develocity is running. Consult the Develocity Administration guide to learn more.

For instructions on how to start using Develocity in your builds, consult the Getting Started with Develocity for Gradle users guide.

Appendix

Appendix A: Using Amazon RDS

This appendix will walk you through using an Amazon RDS PostgreSQL instance as your database.

1. Obtain the Required Permissions

You need permission to create and manage Amazon RDS instances and security groups.

The necessary permissions are granted using the AmazonRDSFullAccess AWS managed policy.

2. Set up an RDS Instance

Develocity is compatible with PostgreSQL 12, 13, or 14. The minimum storage space required is 250 GB with 3,000 or more IOPS.

A. Create a root username and password

Create a root username and password for the database instance, referred to below as «db-root-username» and «db-root-password», respectively. These are the credentials you will use for your database connection; save them somewhere secure.

B. Create a security group and enable ingress

Before creating the database, you have to create a security group in the VPC you want to use.

In this tutorial you will use the eksctl created VPC used by your cluster.

You can use a different VPC, but you will need to make the RDS instance accessible from your cluster (e.g. by peering the VPCs).

To create the security group, run:

$ CLUSTER_VPC_ID=$(
    aws ec2 describe-vpcs \
      --filters Name=tag:aws:cloudformation:stack-name,Values=eksctl-develocity-cluster \
      --query 'Vpcs[0].VpcId' \
      --output text
  )
$ aws ec2 create-security-group --group-name ge-db-sg \
    --description "Develocity DB security group" \
    --vpc-id ${CLUSTER_VPC_ID}

Then enable ingress to the RDS instance from your cluster for port 5432 by running:

$ CLUSTER_SECURITY_GROUP_ID=$(
    aws eks describe-cluster --name develocity \
      --query cluster.resourcesVpcConfig.clusterSecurityGroupId --output text
  )
$ RDS_SECURITY_GROUP_ID=$(
    aws ec2 describe-security-groups \
      --filters Name=group-name,Values=ge-db-sg \
      --query 'SecurityGroups[0].GroupId' --output text
  )
$ aws ec2 authorize-security-group-ingress \
    --protocol tcp --port 5432 \
    --source-group ${CLUSTER_SECURITY_GROUP_ID} \
    --group-id ${RDS_SECURITY_GROUP_ID}

C. Create a subnet group

Before creating the database, you need to create a subnet group to specify how the RDS instance will be networked.

This subnet group must have subnets in at least two availability zones, and should typically use private subnets.

eksctl has already created private subnets you can use.

Create a subnet group containing them by running:

$ CLUSTER_VPC_ID=$(
    aws ec2 describe-vpcs \
      --filters Name=tag:aws:cloudformation:stack-name,Values=eksctl-develocity-cluster \
      --query 'Vpcs[0].VpcId' \
      --output text
  )
$ SUBNET_IDS=$(
    aws ec2 describe-subnets \
      --query 'Subnets[?!MapPublicIpOnLaunch].SubnetId' \
      --filters Name=vpc-id,Values=${CLUSTER_VPC_ID} --output text
  )
$ aws rds create-db-subnet-group --db-subnet-group-name ge-db-subnet-group \
    --db-subnet-group-description "Develocity DB subnet group" \
    --subnet-ids ${SUBNET_IDS}

| Consult RDS’s subnet group documentation for more details on subnet groups and their requirements. |

D. Create the RDS instance

Create the RDS instance:

$ RDS_SECURITY_GROUP_ID=$(
    aws ec2 describe-security-groups \
      --filters Name=group-name,Values=ge-db-sg \
      --query 'SecurityGroups[0].GroupId' --output text
  )
$ aws rds create-db-instance \
    --engine postgres \
    --engine-version 14.3 \
    --db-instance-identifier develocity-database \
    --db-name gradle_enterprise \
    --allocated-storage 250 \ (1)
    --iops 3000 \ (2)
    --db-instance-class db.m5.large \
    --db-subnet-group-name ge-db-subnet-group \
    --backup-retention-period 3 \ (3)
    --no-publicly-accessible \
    --vpc-security-group-ids ${RDS_SECURITY_GROUP_ID} \
    --master-username «db-root-username» \
    --master-user-password «db-root-password»

| 1 | Develocity should be installed with 250 GB of database storage to start with. |
| 2 | Develocity’s data volumes and database should support at least 3,000 IOPS. |
| 3 | The backup retention period, in days. |

While you don’t configure it here, RDS supports storage autoscaling.

| Consult AWS’s database creation guide and the CLI command reference for more details on RDS instance creation. |

You can view the status of your instance with:

$ aws rds describe-db-instances --db-instance-identifier develocity-database

Wait until DBInstanceStatus is available.

You should then see the hostname of the instance under Endpoint. This is the address you will use to connect to the instance, subsequently referred to as «database-address».
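Instead of re-running describe-db-instances, you can let the AWS CLI wait for the instance and then read the endpoint. This is a sketch, assuming the aws rds wait db-instance-available waiter, which polls until the instance is available and exits non-zero if it gives up first; wait_for_rds_endpoint is a hypothetical helper name.

```shell
# Hypothetical helper: blocks until the RDS instance ID is available, then
# prints its endpoint address (the value used as «database-address»).
wait_for_rds_endpoint() {
  aws rds wait db-instance-available --db-instance-identifier "$1" || return 1
  aws rds describe-db-instances \
    --db-instance-identifier "$1" \
    --query 'DBInstances[0].Endpoint.Address' \
    --output text
}
```

For example, DATABASE_ADDRESS=$(wait_for_rds_endpoint develocity-database) stores the endpoint once the instance is ready.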

3. Configure Develocity with RDS

Add the following configuration snippet to your Helm values file:

database:
  location: user-managed
  connection:
    host: «database-address»
    databaseName: gradle_enterprise
  credentials:
    superuser:
      username: «db-root-username»
      password: «db-root-password»

You can substitute «database-address» in the Helm values file by running (verbatim):

$ DATABASE_ADDRESS=$(
    aws rds describe-db-instances \
      --db-instance-identifier develocity-database \
      --query 'DBInstances[0].Endpoint.Address' \
      --output text
  )
$ sed -i "s/«database-address»/${DATABASE_ADDRESS}/g" path/to/values.yaml

| The superuser is only used to set up the database and create migrator and application users. You can avoid using the superuser by setting up the database yourself, as described in the Database options section of Develocity’s installation manual. Please contact Gradle support for help with this. |

This configuration embeds your database superuser credentials in your Helm values file, so the file must be kept secure. If you prefer to provide the credentials as a Kubernetes secret, consult Develocity’s Database options.
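Several steps in this manual fill placeholders such as «database-address» and «account-id» with sed. Before installing or upgrading, a quick guard can confirm that none were missed. This is a sketch; check_no_placeholders is a hypothetical helper name, and it simply looks for the « character that opens every placeholder in this manual.

```shell
# Hypothetical helper: fails (and prints the offending lines with their line
# numbers) if FILE still contains a «...» placeholder; succeeds otherwise.
check_no_placeholders() {
  if grep -n '«' "$1"; then
    echo "Unsubstituted placeholders remain in $1" >&2
    return 1
  fi
}
```

For example, run check_no_placeholders path/to/values.yaml before helm install or helm upgrade.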

Appendix B: Storing Build Scans in S3

This appendix will walk you through using an Amazon S3 bucket to store Build Scans®.

1. Obtain the required permissions

You will need permission to create and manage Amazon S3 buckets. You also need to create IAM policies, roles, and instance profiles, but you already have permission to do that from the eksctl policies.

The necessary permissions can be easily granted by using the AmazonS3FullAccess AWS managed policy.

2. Set up an S3 Bucket and Allow Access

Create an S3 bucket and an IAM policy that allows access to it. Then associate that policy with an IAM role that Develocity’s Kubernetes service account can use.

A. Create an S3 bucket

To create the S3 bucket, run:

$ ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)
$ aws s3 mb s3://develocity-${ACCOUNT_ID} (1)

| 1 | S3 bucket names must be unique across all AWS accounts, within groups of regions. We recommend using your account ID as a suffix. |

| If you have multiple installations of Develocity you want to use S3 storage with, either add a suffix or use the same bucket with a different scans object prefix. |

B. Create a policy allowing bucket access

To create a role allowing access to your bucket, first create a policy.json file with the following content:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:ListBucket"
      ],
      "Resource": [
        "arn:aws:s3:::develocity-«account-id»"
      ]
    },
    {
      "Effect": "Allow",
      "Action": [
        "s3:PutObject",
        "s3:GetObject",
        "s3:DeleteObject",
        "s3:AbortMultipartUpload"
      ],
      "Resource": [
        "arn:aws:s3:::develocity-«account-id»/*"
      ]
    }
  ]
}

Then run the following commands:

$ ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)
$ sed -i "s/«account-id»/${ACCOUNT_ID}/g" ./policy.json
$ aws iam create-policy \
    --policy-name "eksctl-develocity-access" \ (1)
    --policy-document file://policy.json (2)

| 1 | Even though we aren’t using eksctl to create this policy, using the eksctl- prefix avoids the need for additional permissions. |
| 2 | The policy.json file you created. |

C. Create a role for EKS

To associate the service account with an AWS IAM role, the cluster needs an IAM OIDC provider.

We already associated one with the cluster when setting up the EBS CSI driver, so we can reuse it here.

To create a role that can be used from EKS and uses the policy you just created, run:

$ ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)
$ POLICY_ARN="arn:aws:iam::${ACCOUNT_ID}:policy/eksctl-develocity-access"
$ eksctl create iamserviceaccount \
    --name develocity-app \
    --namespace develocity \
    --cluster develocity \
    --approve \
    --role-only \
    --role-name eksctl-managed-Develocity_BuildScans_S3_Role \
    --attach-policy-arn ${POLICY_ARN}

3. Update your Helm Values File

You need to configure Develocity to use the role you created. You also need to increase Develocity’s memory request and limit.

These are both done by adding the following to your Helm values file:

enterprise:
  resources:
    requests:
      memory: 6Gi (1)
    limits:
      memory: 6Gi (1)
  serviceAccount:
    annotations:
      "eks.amazonaws.com/role-arn": "arn:aws:iam::«account-id»:role/eksctl-managed-Develocity_BuildScans_S3_Role" (2)

| 1 | If you have already set a custom value here, instead increase it by 2Gi. |
| 2 | «account-id» is the ID of your AWS account, which you will substitute in a moment. |

| When adding items to your Helm values file, merge any duplicate blocks. |

Then substitute «account-id» in the Helm values file by running:

$ ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)
$ sed -i "s/«account-id»/${ACCOUNT_ID}/g" path/to/values.yaml

| You may need to add nodes to your cluster, or use nodes with more memory, to satisfy the increased memory requirements. The node count and instance type are set in 1. Create a Cluster. |

If you are additionally using the background processor component, you should also add the same service account annotation to it:

enterpriseBackgroundProcessor:
  resources:
    requests:
      memory: 6Gi (1)
    limits:
      memory: 6Gi (1)
  serviceAccount:
    annotations:
      "eks.amazonaws.com/role-arn": "arn:aws:iam::«account-id»:role/eksctl-managed-Develocity_BuildScans_S3_Role" (2)

| 1 | If you have already set a custom value here, instead increase it by 2Gi. |
| 2 | «account-id» is the ID of your AWS account, which you will substitute in a moment. |

Substitute «account-id» in the Helm values file by running:

$ ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)
$ sed -i "s/«account-id»/${ACCOUNT_ID}/g" path/to/values.yaml

Permissions when the background processor is enabled

If you have enabled the background processor component, we recommend creating separate roles with different attached IAM policies for the enterprise and enterpriseBackgroundProcessor components, so that each pod in the installation has no more permissions than it needs.

If enterpriseBackgroundProcessor is enabled in values.yaml:

- the policy attached to the role of enterprise does not require the s3:DeleteObject permission
- the policy attached to the role of enterpriseBackgroundProcessor requires the s3:DeleteObject permission

If enterpriseBackgroundProcessor is not enabled in values.yaml:

- the policy attached to the role of enterprise requires the s3:DeleteObject permission

See Appendix A: Background processing configuration in the Develocity Kubernetes Helm Chart Configuration Guide for more information about the background processor component.
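To follow the separate-roles recommendation above, the bucket policy can be generated with or without s3:DeleteObject. This is a sketch, assuming the develocity-«account-id» bucket name from step A; write_bucket_policy and the output file names are hypothetical.

```shell
# Hypothetical helper: writes an IAM policy for the develocity-ACCOUNT_ID
# bucket to FILE (default policy.json). Pass "yes" as the second argument for
# a role that needs s3:DeleteObject; any other value omits that action.
# Usage: write_bucket_policy ACCOUNT_ID yes|no [FILE]
write_bucket_policy() {
  bucket="develocity-$1"
  delete_action=""
  if [ "$2" = "yes" ]; then
    delete_action='"s3:DeleteObject", '
  fi
  cat > "${3:-policy.json}" <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["s3:ListBucket"],
      "Resource": ["arn:aws:s3:::${bucket}"]
    },
    {
      "Effect": "Allow",
      "Action": ["s3:PutObject", "s3:GetObject", ${delete_action}"s3:AbortMultipartUpload"],
      "Resource": ["arn:aws:s3:::${bucket}/*"]
    }
  ]
}
EOF
}
```

With the background processor enabled, you might run write_bucket_policy "$ACCOUNT_ID" no app-policy.json and write_bucket_policy "$ACCOUNT_ID" yes bgp-policy.json, then create each file as its own eksctl--prefixed policy with aws iam create-policy and attach each policy to its own role as in step C.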

4. Configure Build Scans with S3

Develocity must now be configured to use S3. To do this, you must use the unattended configuration mechanism.

Develocity can store Build Scans in either the configured database or in the configured object store. The unattended configuration mechanism lets you configure which of these is used to store Build Scans as part of a configuration file, which can be embedded in your Helm values file as described in the unattended configuration guide.

This section will describe how to extend your Helm values file to include the correct unattended configuration block for S3 Build Scan storage.

First we need to create a minimal unattended configuration file. This requires you to choose a password for the system user and hash it. To do this, install the Develocity Admin CLI.

Then run:

$ develocityctl config-file hash -o secret.txt -s -

This hashes your password, read from stdin, and writes it to secret.txt. We will refer to the hashed password as «hashed-system-password».

To use your S3 bucket, add the following to your Helm values file:

global:
  unattended:
    configuration:
      version: 9 (1)
      systemPassword: "«hashed-system-password»" (2)
      buildScans:
        incomingStorageType: objectStorage
      advanced:
        app:
          heapMemory: 5632 (3)
      objectStorage:
        type: s3
        s3:
          bucket: develocity-«account-id» (4)
          region: «region» (5)
          credentials:
            source: environment (6)

| 1 | The version of the unattended configuration. |
| 2 | Your hashed system password. |
| 3 | If you have already set a custom value here, instead increase it by 2048. |
| 4 | The bucket you created in A. Create an S3 bucket; your account ID will be substituted below. |
| 5 | The region where your S3 bucket resides, which should be your current region. Viewable by running aws configure list | grep region. |
| 6 | The source for AWS credentials, in this example the execution environment, as discussed in Credentials sourced from the execution environment. |
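The «region» placeholder can be filled in the same way as «account-id». This is a sketch, assuming the region is read with aws configure get region (or passed explicitly as a second argument) and GNU sed -i as used elsewhere in this manual; substitute_region is a hypothetical helper name.

```shell
# Hypothetical helper: replaces «region» in FILE with REGION, or with the
# AWS CLI's configured default region when REGION is omitted.
# Usage: substitute_region FILE [REGION]
substitute_region() {
  region=${2:-$(aws configure get region)}
  if [ -z "$region" ]; then
    echo "No region given and none configured for the AWS CLI" >&2
    return 1
  fi
  sed -i "s/«region»/${region}/g" "$1"
}
```

For example, substitute_region path/to/values.yaml, or substitute_region path/to/values.yaml us-west-1 if your bucket is in a region other than the CLI default.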

Then substitute «account-id» in the Helm values file by running:

$ ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)
$ sed -i "s/«account-id»/${ACCOUNT_ID}/g" path/to/values.yaml

Once you have updated your Helm values file as described above, you need to reapply it using the method described in Changing Configuration Values. This will update your Develocity installation to use the unattended configuration you created above, and Develocity will restart.

5. Verify S3 Storage is Used

| Develocity will start even if your S3 configuration is incorrect. |

To confirm that Develocity is storing incoming Build Scans in S3 and also able to read Build Scan data from S3, you should first upload a new Build Scan to your Develocity instance. Second, confirm that you can view the Build Scan. Finally, confirm that the Build Scan is stored in your S3 bucket, by running:

$ ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)
$ aws s3 ls s3://develocity-${ACCOUNT_ID}/build-scans/ \ (1)
    --recursive --human-readable --summarize

| 1 | If you used a custom prefix, use it here instead of build-scans. |

2022-09-27 19:11:06    6.6 KiB build-scans/2022/09/27/aprvi3bnnxyzm

Total Objects: 1
   Total Size: 6.6 KiB
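To turn this check into a pass/fail step, for example in a post-installation script, the summary line can be parsed. This is a sketch, assuming the "Total Objects:" line that --summarize prints as shown above; scan_object_count is a hypothetical helper name.

```shell
# Hypothetical helper: reads `aws s3 ls ... --summarize` output on stdin and
# prints the "Total Objects" count, or 0 if no summary line is present.
scan_object_count() {
  awk '/Total Objects:/ { n = $3 } END { print n + 0 }'
}
```

For example, [ "$(aws s3 ls s3://develocity-${ACCOUNT_ID}/build-scans/ --recursive --summarize | scan_object_count)" -gt 0 ] succeeds once at least one Build Scan has been stored in the bucket.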